Dense object counting with YOLO variants on the CARPK dataset

Authors

- Lu Xu

- Mohammad Rezaei

Dataset

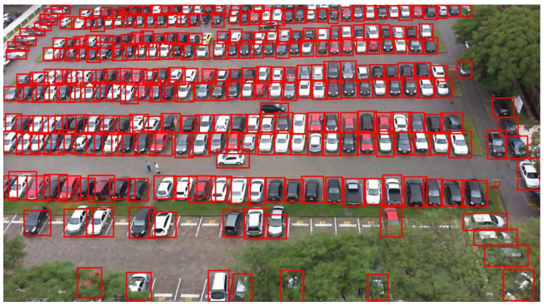

We use the CARPK dataset (1,448 high-resolution drone images, 89,774 annotated car boxes) with its predefined train/test split. We additionally hold out 20% of the original 989-image training split as a validation set. Annotations are converted to COCO format and then to YOLO format for training.

- Train: 791 images

- Val: 198 images

- Test: 459 images
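The two preparation steps above (COCO-to-YOLO box conversion and the 80/20 train/validation hold-out) can be sketched in Python as follows. This is a minimal illustration, assuming COCO boxes in `[x_min, y_min, width, height]` pixel format and a single `car` class with id 0. The helper names (`coco_to_yolo`, `yolo_label_lines`, `split_train_val`), the example box, the 1280x720 image size and the random seed are hypothetical and not taken from this project, so the exact validation images will differ from the authors' split; only the split sizes are reproduced.

```python
import random
from typing import Iterable, List, Sequence, Tuple


def coco_to_yolo(bbox: Sequence[float], img_w: int, img_h: int) -> Tuple[float, float, float, float]:
    """Convert a COCO box [x_min, y_min, w, h] in pixels to YOLO (x_c, y_c, w, h) normalized by image size."""
    x, y, w, h = bbox
    return ((x + w / 2) / img_w, (y + h / 2) / img_h, w / img_w, h / img_h)


def yolo_label_lines(bboxes: Iterable[Sequence[float]], img_w: int, img_h: int,
                     class_id: int = 0) -> List[str]:
    """Build the lines of one YOLO label file: 'class x_c y_c w h' per box (hypothetical helper)."""
    lines = []
    for bbox in bboxes:
        xc, yc, bw, bh = coco_to_yolo(bbox, img_w, img_h)
        lines.append(f"{class_id} {xc:.6f} {yc:.6f} {bw:.6f} {bh:.6f}")
    return lines


def split_train_val(image_ids: Iterable[int], val_fraction: float = 0.2,
                    seed: int = 0) -> Tuple[List[int], List[int]]:
    """Shuffle image ids with a fixed seed and hold out val_fraction of them for validation."""
    ids = sorted(image_ids)
    random.Random(seed).shuffle(ids)
    n_val = round(len(ids) * val_fraction)
    return ids[n_val:], ids[:n_val]


# Hypothetical example: one box on a 1280x720 image, and a 20% hold-out from 989 training images.
print(yolo_label_lines([[100, 50, 40, 20]], img_w=1280, img_h=720))
train_ids, val_ids = split_train_val(range(989))
print(len(train_ids), len(val_ids))
```

With 989 training images, `round(989 * 0.2)` is 198, which matches the 791/198 train/validation sizes listed above.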

Metrics & Fitness

- Detection: Precision, Recall, IoU, mAP@0.5 / mAP@0.5:0.95

- Counting: MAE, RMSE

- Fitness (for hyperparameter optimization, HPO):

$$S = 0.4\cdot \text{mAP@0.5} + 0.3\cdot \frac{1}{1+\text{MAE}} + 0.3\cdot \frac{1}{1+\text{RMSE}}$$

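Because the three weights sum to 1 and each term lies in [0, 1], S also lies in [0, 1]. Giving the two counting terms 60% of the total weight nudges the search toward lower counting error, while the mAP@0.5 term keeps detection quality in the objective.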
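The counting metrics and the fitness score can be sketched in Python as follows. This is a minimal illustration, assuming that MAE and RMSE are computed over per-image car counts on the evaluated split and that mAP@0.5 is given as a fraction in [0, 1]; the function names and the example counts are hypothetical.

```python
import math
from typing import Sequence


def _check(pred: Sequence[float], true: Sequence[float]) -> None:
    """Both sequences must hold one count per image, in the same order."""
    if len(pred) != len(true) or not true:
        raise ValueError("pred and true must be non-empty and of equal length")


def count_mae(pred: Sequence[float], true: Sequence[float]) -> float:
    """Mean absolute error between predicted and ground-truth per-image counts."""
    _check(pred, true)
    return sum(abs(p - t) for p, t in zip(pred, true)) / len(true)


def count_rmse(pred: Sequence[float], true: Sequence[float]) -> float:
    """Root mean squared error between predicted and ground-truth per-image counts."""
    _check(pred, true)
    return math.sqrt(sum((p - t) ** 2 for p, t in zip(pred, true)) / len(true))


def fitness(map50: float, mae: float, rmse: float) -> float:
    """S = 0.4 * mAP@0.5 + 0.3 / (1 + MAE) + 0.3 / (1 + RMSE)."""
    return 0.4 * map50 + 0.3 / (1.0 + mae) + 0.3 / (1.0 + rmse)


pred_counts = [12, 18, 30]  # hypothetical predicted cars per image
true_counts = [10, 20, 30]  # hypothetical ground-truth cars per image
mae = count_mae(pred_counts, true_counts)
rmse = count_rmse(pred_counts, true_counts)
print(f"MAE={mae:.3f} RMSE={rmse:.3f} S={fitness(0.9, mae, rmse):.3f}")
```

Since `fitness` only needs three scalars, it can be evaluated after each validation run and used directly to rank HPO candidates; a perfect detector and counter (mAP@0.5 = 1, MAE = RMSE = 0) scores 1.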

Model(s)

This work investigates YOLOv3, YOLOv5, and YOLOv8 for object detection and dense object counting on the CARPK drone dataset. We train baselines, run hyperparameter evolution, and evaluate both standard detection metrics (Precision/Recall/mAP/IoU) and counting metrics (MAE, RMSE). We also define a custom fitness score that balances detection and counting performance during HPO.
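The text does not spell out how counts are derived from detections; a common approach, assumed here, is to count the boxes that survive confidence filtering and non-maximum suppression (NMS). The following minimal Python sketch illustrates that rule with greedy NMS on axis-aligned boxes `(x1, y1, x2, y2)`, and its `iou` helper is the same box overlap measure listed under the detection metrics. `count_objects` and the thresholds `conf_thr=0.25` and `iou_thr=0.5` are hypothetical, not the settings used in this work. YOLO inference pipelines such as Ultralytics already apply NMS to their outputs, so in that setting the per-image count is simply the number of returned boxes above the confidence threshold.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def greedy_nms(boxes: Sequence[Box], scores: Sequence[float], iou_thr: float) -> List[int]:
    """Keep boxes in descending score order unless they overlap an already kept box by more than iou_thr."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        if all(iou(boxes[i], boxes[j]) <= iou_thr for j in keep):
            keep.append(i)
    return keep


def count_objects(boxes: Sequence[Box], scores: Sequence[float],
                  conf_thr: float = 0.25, iou_thr: float = 0.5) -> int:
    """Hypothetical counting rule: number of detections left after confidence filtering and NMS."""
    cand = [i for i, s in enumerate(scores) if s >= conf_thr]
    kept = greedy_nms([boxes[i] for i in cand], [scores[i] for i in cand], iou_thr)
    return len(kept)


# Two heavily overlapping boxes (duplicate detections of one car) plus one separate car.
boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
print(count_objects(boxes, scores))
```

In dense parking lots, neighbouring cars can overlap in the image, so `iou_thr` trades off merging duplicate detections against suppressing genuinely adjacent cars; this is one reason the counting error is worth tracking separately from mAP.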

Results

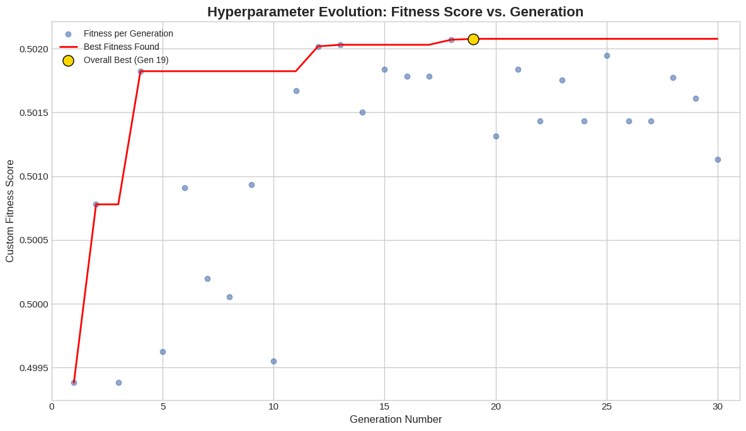

Hyperparameter evolution

The results of the hyperparameter evolution for the YOLOv5 model are presented below as a representative example:

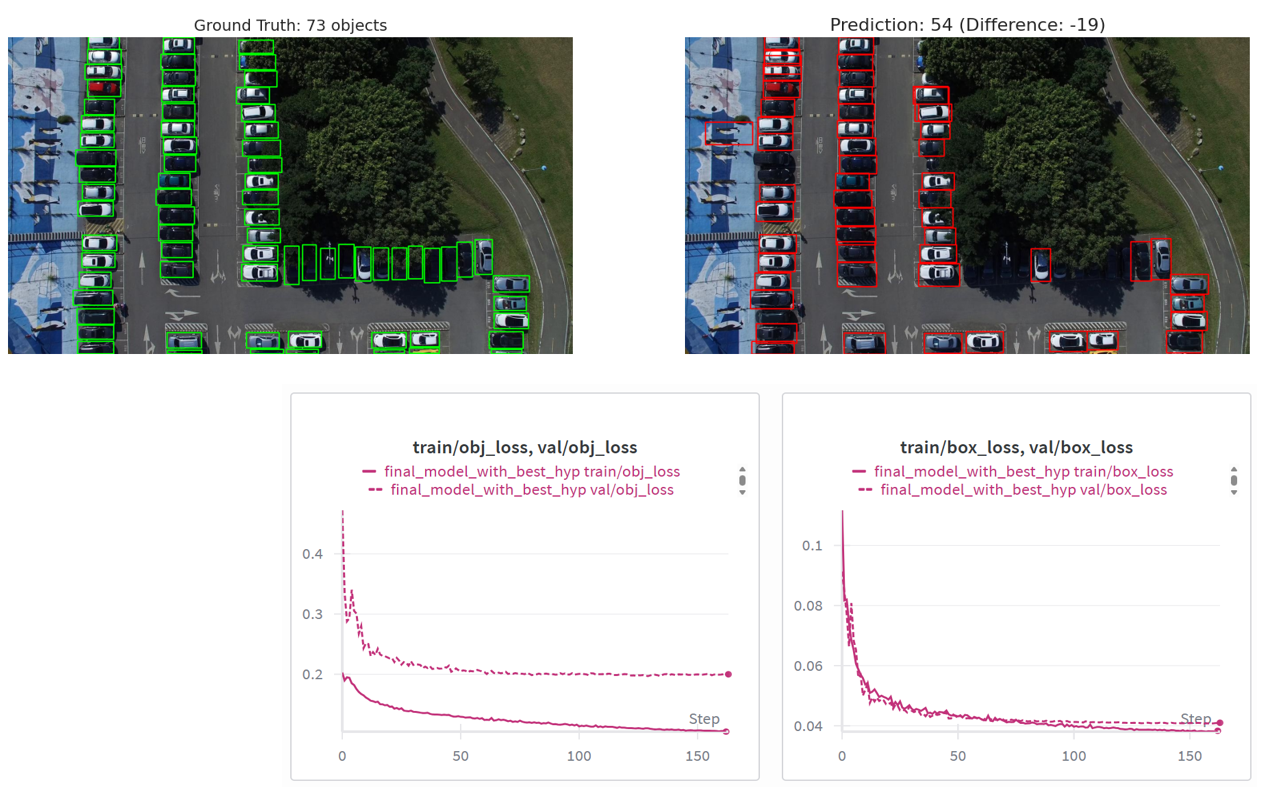

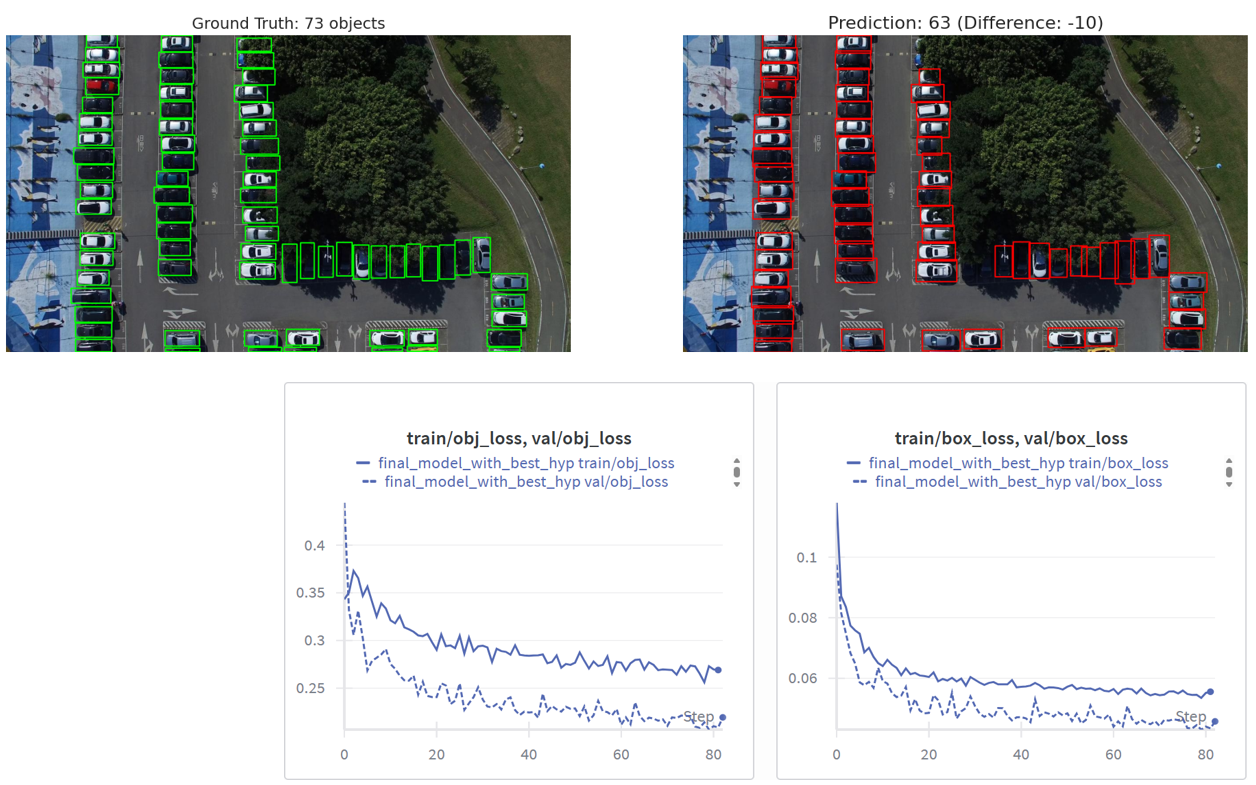
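The following Python sketch illustrates the general idea of mutation-based hyperparameter evolution, a simplified scheme in the spirit of YOLOv5's evolve mode rather than its actual code. It repeatedly picks a parent among the best configurations found so far (weighted by fitness), scales a random subset of its hyperparameters by a clipped Gaussian factor, clips the result to bounds, and evaluates it. The search space `BOUNDS`, the mutation settings and the toy objective are hypothetical; in this project the objective would be the fitness score S computed after a training run.

```python
import random
from typing import Callable, Dict, List, Tuple

Hyp = Dict[str, float]

# Hypothetical search space: hyperparameter -> (lower bound, upper bound).
BOUNDS: Dict[str, Tuple[float, float]] = {
    "lr0": (1e-5, 1e-1),
    "momentum": (0.6, 0.98),
    "mosaic": (0.0, 1.0),
    "fliplr": (0.0, 1.0),
}


def mutate(parent: Hyp, rng: random.Random, mut_prob: float = 0.8, sigma: float = 0.2) -> Hyp:
    """With probability mut_prob, scale each value by a Gaussian factor clipped to [0.3, 3.0]; then clip to BOUNDS."""
    child: Hyp = {}
    for name, value in parent.items():
        factor = 1.0
        if rng.random() < mut_prob:
            factor = min(max(1.0 + rng.gauss(0.0, sigma), 0.3), 3.0)
        lo, hi = BOUNDS[name]
        child[name] = min(max(value * factor, lo), hi)
    return child


def evolve(initial: Hyp, evaluate: Callable[[Hyp], float], generations: int,
           top_k: int = 5, seed: int = 0) -> Tuple[Hyp, float]:
    """Return the best (hyperparameters, fitness) pair seen after `generations` mutations."""
    rng = random.Random(seed)
    history: List[Tuple[Hyp, float]] = [(initial, evaluate(initial))]
    for _ in range(generations):
        top = sorted(history, key=lambda h: h[1], reverse=True)[:top_k]
        lowest = min(f for _, f in top)
        weights = [f - lowest + 1e-6 for _, f in top]  # fitter parents are chosen more often
        parent = rng.choices(top, weights=weights, k=1)[0][0]
        child = mutate(parent, rng)
        history.append((child, evaluate(child)))
    return max(history, key=lambda h: h[1])


def toy_fitness(h: Hyp) -> float:
    """Toy stand-in for a training run; peaks at lr0 = 0.01 and momentum = 0.9."""
    return 1.0 / (1.0 + 1e4 * (h["lr0"] - 0.01) ** 2 + 10 * (h["momentum"] - 0.9) ** 2)


start = {"lr0": 0.001, "momentum": 0.7, "mosaic": 1.0, "fliplr": 0.5}
best, score = evolve(start, toy_fitness, generations=50)
print(best, round(score, 3))
```

Because the mutation is multiplicative, a hyperparameter that starts at exactly 0 never moves; starting values should therefore be non-zero for anything that is meant to be searched. Each call to `evaluate` corresponds to a full training run in practice, which is why evolution is usually run with a small number of epochs per candidate.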

Impact of data augmentation

Weak augmentation:

Strong augmentation:

Models performance

The table below compares the test-set counting error (MAE and RMSE; lower is better) of the three YOLO models against the initial benchmark.

| Metric | Benchmark | YOLOv3 | YOLOv5 | YOLOv8 |
|---|---|---|---|---|
| Test MAE | 23.80 | 24.01 | 4.84 | 4.65 |
| Test RMSE | 36.79 | 30.37 | 6.50 | 6.41 |
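The relative error reduction with respect to the benchmark, $100 \cdot (\text{benchmark} - \text{model}) / \text{benchmark}$, can be computed directly from the table values. A small Python sketch (the `relative_reduction` helper is hypothetical; positive values mean lower error than the benchmark):

```python
from typing import Dict, Tuple

# (Test MAE, Test RMSE) copied from the table above.
RESULTS: Dict[str, Tuple[float, float]] = {
    "Benchmark": (23.80, 36.79),
    "YOLOv3": (24.01, 30.37),
    "YOLOv5": (4.84, 6.50),
    "YOLOv8": (4.65, 6.41),
}


def relative_reduction(baseline: float, value: float) -> float:
    """Percentage by which `value` is lower than `baseline` (negative if higher)."""
    return 100.0 * (baseline - value) / baseline


base_mae, base_rmse = RESULTS["Benchmark"]
for model in ("YOLOv3", "YOLOv5", "YOLOv8"):
    mae, rmse = RESULTS[model]
    print(f"{model}: MAE {relative_reduction(base_mae, mae):+.1f}%, "
          f"RMSE {relative_reduction(base_rmse, rmse):+.1f}%")
```

On these numbers, YOLOv8 lowers MAE by about 80.5% and RMSE by about 82.6% relative to the benchmark, and YOLOv5 by about 79.7% and 82.3%. YOLOv3 improves RMSE by about 17.5%, but its MAE is about 0.9% higher than the benchmark.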